Beidi Chen, a postdoctoral researcher at Stanford, was the guest of LightOn’s 13th AI Meetup, where she presented her work "SLIDE&MONGOOSE: LSH Frameworks for Efficient Neural Networks Training⚡", to appear at ICLR 2021.

The 📺 recording of the meetup is on LightOn’s Youtube channel. Subscribe to the channel and subscribe to our Meetup to get notified of the next videos and events!

We have previously talked about co-designing hardware and software ↔️ to unlock the next generation of machine learning models 🤖, and this talk fits perfectly in that narrative.

The underlying idea is to rely on Locality Sensitive Hashing (LSH) to trade accuracy for efficiency when performing the giant matrix multiplications found in attention layers and in the large output layers of extreme classification problems. However, a naive application of LSH introduces a high overhead, because the hash tables must be kept up to date as the weights change during training, and is therefore not useful in practice.
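To make the idea concrete, here is a minimal sketch of how LSH can skip most of a large output layer: each output neuron's weight vector is hashed once with random hyperplanes, and for a given input only the neurons that land in the same bucket get their logits computed. It is a simplification under stated assumptions (a single hash table, plain Python, hypothetical helper names `simhash`, `build_table`, `sparse_logits`), not the SLIDE or MONGOOSE implementation.

```python
import random
from collections import defaultdict


def simhash(x, planes):
    """Bucket id of x: bit i is set when x lies on the positive side of hyperplane i."""
    code = 0
    for i, w in enumerate(planes):
        if sum(w_i * x_i for w_i, x_i in zip(w, x)) >= 0:
            code |= 1 << i
    return code


def build_table(weights, planes):
    """Hash every output neuron (one weight vector per row) into a bucket."""
    table = defaultdict(list)
    for j, w in enumerate(weights):
        table[simhash(w, planes)].append(j)
    return table


def sparse_logits(h, weights, table, planes):
    """Compute logits only for the neurons that share the input's bucket."""
    candidates = table.get(simhash(h, planes), [])
    return {j: sum(w_i * h_i for w_i, h_i in zip(weights[j], h)) for j in candidates}


rng = random.Random(0)
dim, num_classes, num_planes = 16, 1000, 6
weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_classes)]
planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
table = build_table(weights, planes)

h = list(weights[42])  # an input perfectly aligned with neuron 42
logits = sparse_logits(h, weights, table, planes)
print(f"computed {len(logits)} of {num_classes} logits; neuron 42 included: {42 in logits}")
```

Note that `build_table` has to be rerun whenever the weights change, which hints at why a naive application becomes expensive during training.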

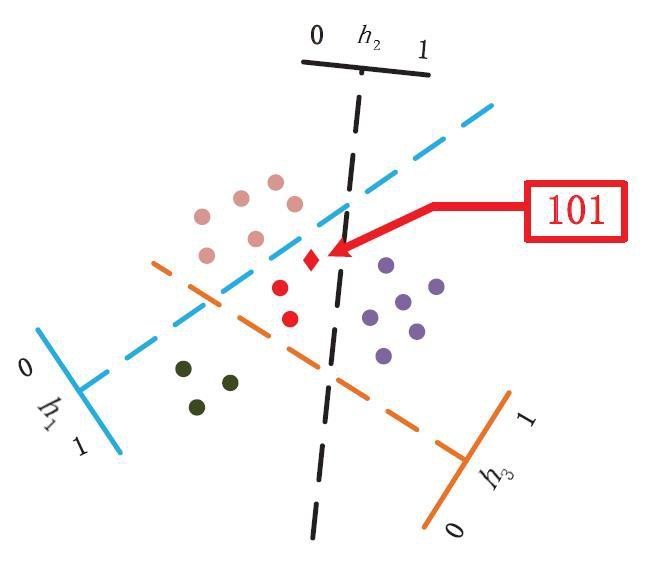

Locality Sensitive Hashing (LSH) is an approximate nearest neighbor search algorithm that maps similar items into the same buckets by dividing the space with random hyperplanes.

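How strongly similar items are drawn into the same bucket can be quantified for random hyperplanes (the SimHash scheme): a single random Gaussian hyperplane leaves two vectors on the same side with probability 1 - θ/π, where θ is the angle between them. The sketch below checks this empirically; it is a plain-Python illustration with hypothetical helper names (`same_side`, `collision_rate`), not code from the talk.

```python
import math
import random


def same_side(x, y, plane):
    """True when x and y fall on the same side of the hyperplane with normal `plane`."""
    dx = sum(p * v for p, v in zip(plane, x))
    dy = sum(p * v for p, v in zip(plane, y))
    return (dx >= 0) == (dy >= 0)


def collision_rate(x, y, num_planes=20000, seed=0):
    """Fraction of random Gaussian hyperplanes that do not separate x and y."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_planes):
        plane = [rng.gauss(0.0, 1.0) for _ in range(len(x))]
        hits += same_side(x, y, plane)
    return hits / num_planes


for degrees in (10, 45, 90, 170):
    t = math.radians(degrees)
    x, y = [1.0, 0.0, 0.0], [math.cos(t), math.sin(t), 0.0]
    print(f"{degrees:>3} deg: empirical {collision_rate(x, y):.3f}, theory {1 - t / math.pi:.3f}")
```

Concatenating several such bits into one bucket id makes collisions much rarer for dissimilar vectors than for similar ones.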

The main insight 🔎 behind MONGOOSE is that most weights do not change enough during training to trigger LSH updates: changes are large at the beginning but plateau early, with only 1–5% of hash codes changing per epoch on average. If we had an oracle 🔱, we could reduce the frequency of LSH updates by a factor of 100.
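The plateau effect is easy to reproduce on synthetic data: the sketch below perturbs a random weight matrix with updates of decreasing size and reports what share of SimHash codes change. The data, noise scales, and helper names are hypothetical, and the printed numbers are not the measurements reported in the talk. If only about 1% of codes change, rehashing just those rows touches about 1/100 of the table, which is the arithmetic behind the factor of 100.

```python
import random


def simhash(x, planes):
    """Bucket id of x: bit i is set when x lies on the positive side of hyperplane i."""
    code = 0
    for i, w in enumerate(planes):
        if sum(w_i * x_i for w_i, x_i in zip(w, x)) >= 0:
            code |= 1 << i
    return code


def changed_fraction(old_weights, new_weights, planes):
    """Share of rows whose hash code differs between two weight snapshots."""
    changed = sum(
        simhash(old, planes) != simhash(new, planes)
        for old, new in zip(old_weights, new_weights)
    )
    return changed / len(old_weights)


rng = random.Random(1)
dim, num_rows, num_planes = 32, 2000, 8
planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
before = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]

for noise in (0.5, 0.05, 0.005):  # large early updates versus small late ones
    after = [[w + rng.gauss(0.0, noise) for w in row] for row in before]
    frac = changed_fraction(before, after, planes)
    print(f"update scale {noise}: {frac:.1%} of hash codes changed")
```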

The solution is to maintain a low-cost structure that acts as a scheduler for the updates: this structure holds two copies of the network parameters that differ in only a few coordinates. When the difference between the two copies exceeds some value, or when many coordinates are close to the decision boundary, the hash codes are updated. This last condition is the key trick that allows us to leverage efficient parallelism.
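One possible reading of this scheduler, sketched in plain Python: `hashed` holds the copy of the weights the hash tables were built from, the live weights are the second copy, and a rehash is triggered when a row drifts too far or when too many moved rows sit close to a hyperplane. The class name `LazyRehashScheduler`, its thresholds, and the exact form of both conditions are hypothetical illustrations, not MONGOOSE's actual scheduler.

```python
import math
import random


class LazyRehashScheduler:
    """Hypothetical sketch of a scheduler that decides when hash codes need updating.

    `hashed` is the copy of the weights the hash tables were built from;
    the live weights are passed in at each check.
    """

    def __init__(self, weights, planes, drift_tol=0.5, margin=0.05, max_near_boundary=10):
        self.planes = planes
        self.hashed = [list(row) for row in weights]
        self.drift_tol = drift_tol                  # condition 1 threshold (hypothetical)
        self.margin = margin                        # "close to a hyperplane" (hypothetical)
        self.max_near_boundary = max_near_boundary  # condition 2 threshold (hypothetical)

    def should_rehash(self, current):
        near_boundary = 0
        for old, new in zip(self.hashed, current):
            drift = math.dist(old, new)
            if drift > self.drift_tol:
                return True  # condition 1: a row moved far from its hashed copy
            if drift > 0:
                # condition 2: count projections of moved rows that sit near a hyperplane,
                # since their hash bit may flip with the next small update
                near_boundary += sum(
                    abs(sum(p_i * w_i for p_i, w_i in zip(p, new))) < self.margin
                    for p in self.planes
                )
        return near_boundary > self.max_near_boundary

    def rehash(self, current):
        """Rebuild step: a real system would refresh its hash tables here."""
        self.hashed = [list(row) for row in current]


rng = random.Random(0)
dim, num_rows, num_planes = 8, 50, 4
planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]
scheduler = LazyRehashScheduler(weights, planes)

rehashes = 0
for step in range(200):
    scale = 0.1 / (1 + step)  # updates shrink over time, mimicking the plateau
    weights = [[w + rng.gauss(0.0, scale) for w in row] for row in weights]
    if scheduler.should_rehash(weights):
        scheduler.rehash(weights)
        rehashes += 1
print(f"rehashed {rehashes} times in 200 steps")
```

Counting only rows that have moved since the last rehash keeps the second condition from firing on every step right after a rebuild.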

The second trick is to introduce Learnable LSH to better separate the data in the nearest neighbor search. Every time the scheduler triggers an update, a training signal based on a triplet loss is computed to train the LSH parameters.
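A minimal sketch of such a triplet-loss signal, under explicit assumptions: the non-differentiable sign() of hyperplane hashing is relaxed to tanh, the loss is the standard hinge triplet loss on those relaxed codes, and the gradient with respect to the hyperplanes `W` is derived by hand. The function names and this exact formulation are hypothetical; MONGOOSE's learnable LSH may be parameterized and trained differently.

```python
import math
import random


def relaxed_codes(W, x):
    """Differentiable stand-in for sign(W x): tanh of each hyperplane projection."""
    return [math.tanh(sum(w_j * x_j for w_j, x_j in zip(row, x))) for row in W]


def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss_and_grad(W, anchor, pos, neg, margin=1.0):
    """Hinge triplet loss max(0, |ha-hp|^2 - |ha-hn|^2 + margin) and its gradient w.r.t. W."""
    ha, hp, hn = (relaxed_codes(W, x) for x in (anchor, pos, neg))
    loss = sq_dist(ha, hp) - sq_dist(ha, hn) + margin
    grad = [[0.0] * len(anchor) for _ in W]
    if loss <= 0:
        return 0.0, grad  # triplet already separated by the margin
    for i in range(len(W)):
        terms = (
            (2 * (hn[i] - hp[i]), ha[i], anchor),  # dL/dha_i
            (-2 * (ha[i] - hp[i]), hp[i], pos),    # dL/dhp_i
            (2 * (ha[i] - hn[i]), hn[i], neg),     # dL/dhn_i
        )
        for dl_dh, h, x in terms:
            dl_dz = dl_dh * (1 - h * h)  # chain rule through tanh'(z) = 1 - tanh(z)^2
            for j, x_j in enumerate(x):
                grad[i][j] += dl_dz * x_j
    return loss, grad


rng = random.Random(0)
dim, num_planes, lr = 8, 6, 0.05
W = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
anchor = [rng.gauss(0.0, 1.0) for _ in range(dim)]
pos = [a + rng.gauss(0.0, 0.3) for a in anchor]  # near neighbour of the anchor
neg = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # unrelated point

for step in range(50):
    loss, grad = triplet_loss_and_grad(W, anchor, pos, neg)
    W = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W, grad)]
    if step % 10 == 0:
        print(f"step {step}: triplet loss {loss:.3f}")
```

In a MONGOOSE-like loop, a step like this would run only when the scheduler triggers an update, so its cost is paid rarely.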

Since the update time is related to the query time, the overall update overhead can be reduced even if a training phase is introduced, provided that the scheduler performs well.

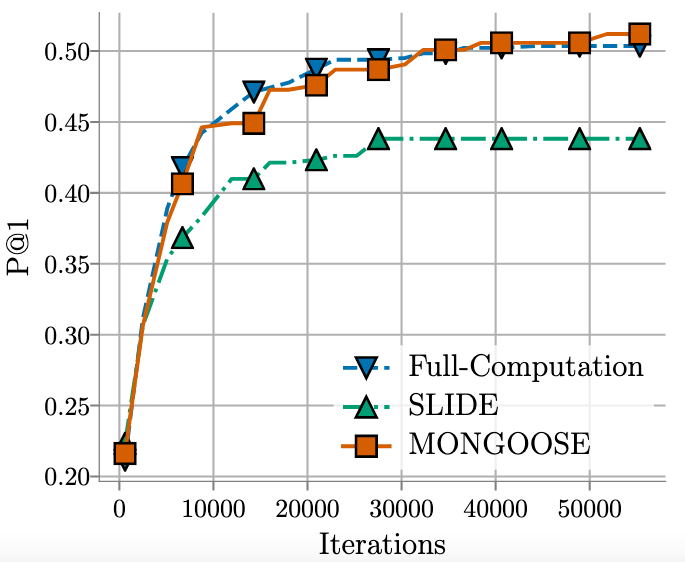

P@1 versus training iteration on Wiki-325k from “MONGOOSE: A Learnable LSH Framework for Efficient Neural Network Training”.

MONGOOSE is 5–20x faster and up to 4x more memory efficient than the baseline, without sacrificing accuracy on extreme classification and NLP tasks.

If you want to reproduce these results, extend them or try out your latest idea, you can register for the LightOn Cloud Free Trial or apply to the LightOn Cloud for Research Program!

Summary of LightOn AI meetup #13