Large language models have reached a degree of sophistication that surprises even experts. They can be instructed in natural language, and they respond in kind. Because this kind of interaction is so familiar to us, and because the generated output seemingly demonstrates understanding and creativity, these models are held to the standard of how a real human would respond. That standard covers not only the grammatical correctness and factual accuracy of the generated text, but also the familiarity and specific knowledge one would expect from a native speaker. This is reminiscent of the visual “uncanny valley”, where small deviations from a human likeness evoke a feeling of unease. Here, the uncanny valley is linguistic and cultural in nature.

Until now, the most widely used large language models have been trained on a corpus composed mainly of English text. A common approach to making them available in other languages is to add a translation layer. In this blog post we show that this can lead to the loss or misrepresentation of important knowledge and cultural idiosyncrasies. Through specific examples, we demonstrate that our natively trained French model not only produces high-quality text, but also avoids these pitfalls.

The weight of a cat

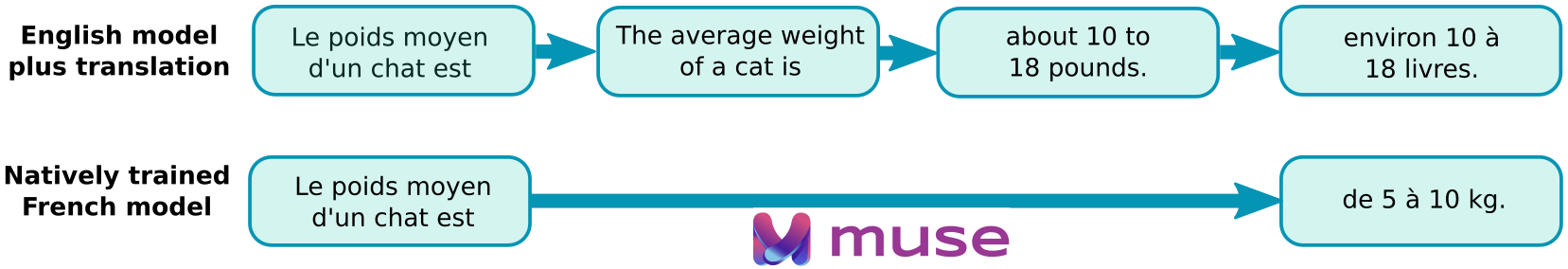

To illustrate this, we start with a simple example: we ask, in French, for the average weight of a cat.

For the English-trained model, we first have to translate the prompt from French to English and pass the translated prompt to the model, which computes the answer. The answer is then translated back into French. The native French model skips both translation steps. Although the answer generated by the English model is factually correct, it gives the weight of the cat in pounds (“livres” in French), a unit that is not commonly used in France. In contrast, the answer generated by our native French model via our Muse API is correct and uses the unit expected in this context.
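To make the two set-ups concrete, here is a minimal sketch of the data flow described above. It is not LightOn's code and does not call the Muse API: `translated_pipeline`, `native_pipeline` and the `toy_*` functions are hypothetical names, and the toy functions return hard-coded placeholder strings (including an assumed prompt wording), not real model or translation output. The point is only structural: in the translated set-up, whatever unit the English model picks passes through a translator that changes the words, not the unit.

```python
from typing import Callable

# A "text function" maps one string to another: a translator or a language model.
TextFn = Callable[[str], str]


def translated_pipeline(prompt_fr: str, fr_to_en: TextFn, model_en: TextFn, en_to_fr: TextFn) -> str:
    """English-trained model behind a translation layer: FR -> EN -> model -> FR."""
    prompt_en = fr_to_en(prompt_fr)   # 1. translate the French prompt into English
    answer_en = model_en(prompt_en)   # 2. the English model answers in English
    return en_to_fr(answer_en)        # 3. translate the answer back into French


def native_pipeline(prompt_fr: str, model_fr: TextFn) -> str:
    """Natively trained French model: French in, French out, no translation steps."""
    return model_fr(prompt_fr)


# Hypothetical toy stand-ins with hard-coded placeholder strings.
# They are NOT real models, NOT real translation services, and NOT actual outputs.
PROMPT_FR = "Quel est le poids moyen d'un chat ?"


def toy_fr_to_en(text: str) -> str:
    return {PROMPT_FR: "What is the average weight of a cat?"}.get(text, text)


def toy_model_en(text: str) -> str:
    return "About 10 pounds."


def toy_en_to_fr(text: str) -> str:
    # The unit word is translated ("livres"), but the value is not converted to kg.
    return {"About 10 pounds.": "Environ 10 livres."}.get(text, text)


def toy_model_fr(text: str) -> str:
    return "Environ 4,5 kg."


if __name__ == "__main__":
    print(translated_pipeline(PROMPT_FR, toy_fr_to_en, toy_model_en, toy_en_to_fr))
    print(native_pipeline(PROMPT_FR, toy_model_fr))
```

Keeping the translators and models as plain function parameters makes it easy to see that the translated pipeline has three places where meaning can drift, while the native one has a single step.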

This example belongs to a larger class of cases where the translation layer introduces mistakes. Units (imperial vs. metric), currencies (USD vs. EUR), temperatures (Fahrenheit vs. Celsius), distances (miles vs. kilometres) and heights (feet and inches vs. metres and centimetres) also regularly get mixed up in generated text. The underlying reason is that the English model can only draw on what it has seen during training, so it tends to use imperial units and dollars. This is then combined with a translation step that is very good at producing grammatically correct French, but lacks the awareness to also convert units and currencies to those commonly used in the target language.
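For illustration, the sketch below shows the numeric conversions that a translation layer would have to apply, and typically does not, for the unit pairs listed above. The function names are hypothetical; the conversion factors are the exact international definitions. Currency is deliberately left out, because USD to EUR has no fixed factor and depends on the exchange rate of the day. Note that this only covers the easy part: spotting units in free text and deciding when a conversion is appropriate is the hard part, and is not shown here.

```python
# Exact conversion factors, fixed by international definition.
KG_PER_POUND = 0.45359237
KM_PER_MILE = 1.609344
M_PER_FOOT = 0.3048
M_PER_INCH = 0.0254


def pounds_to_kg(pounds: float) -> float:
    """Weight: pounds -> kilograms."""
    return pounds * KG_PER_POUND


def mph_to_kmh(mph: float) -> float:
    """Speed: miles per hour -> kilometres per hour."""
    return mph * KM_PER_MILE


def fahrenheit_to_celsius(fahrenheit: float) -> float:
    """Temperature: degrees Fahrenheit -> degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


def feet_inches_to_cm(feet: float, inches: float) -> float:
    """Height: feet and inches -> centimetres."""
    return (feet * M_PER_FOOT + inches * M_PER_INCH) * 100


if __name__ == "__main__":
    print(round(mph_to_kmh(65)))        # 105
    print(round(pounds_to_kg(10), 2))   # 4.54
    print(fahrenheit_to_celsius(212))   # 100.0
```

Converting 65 mph this way gives about 104.6 km/h, which is where the “about 105 km/h” figure quoted further down comes from.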

In the following, we mark the original French prompt with ✍️🇫🇷. Where necessary, the output generated by our French model is preceded by 🤖🇫🇷 and that of the English model by 🤖🇺🇸. An English translation of each prompt and generated text is provided alongside the French.

Another fun example in the same vein comes from pop culture, specifically movie titles. An English model combined with translation completes the prompt

✍️🇫🇷 En 2009, Todd Phillips produit et réalise un film mettant en scène Bradley Cooper, Ed Helms, Zach Galifianakis et Justin Bartha qui s'appelle

In 2009, Todd Phillips produced and directed a film starring Bradley Cooper, Ed Helms, Zach Galifianakis and Justin Bartha called

with “The Hangover”. Our native French model completes the same prompt with the contextually correct answer, “Very Bad Trip”, the title under which the film was released in France.

Factual inaccuracies

A second class of examples involves generated text that is factually incorrect once the regional and cultural context is taken into account. To illustrate this, consider the following prompt:

✍️🇫🇷 La consommation annuelle moyenne d'électricité résidentielle est de

The average annual residential electricity consumption is

The English model tends to return values around 10 000 kWh, whereas the answers of our native French model cluster around 5 000 kWh. The former is accurate for North America, which is likely where most of the web-scraped training data comes from. But it is wrong in the French context, where, owing to regional, historical, cultural and political differences, the latter figure is correct.

✍️🇫🇷 La limitation de vitesse sur les autoroutes est

The speed limit on the motorways is

The English model gives 65 mph, or about 105 km/h. Our French model gives 130 km/h, the standard limit on French motorways.

We found prompts on a range of other topics that provoke the same bias, such as the legal driving age, universal health care, the legal drinking age and the financial capital of the country.

Cultural subtleties

Factual accuracy is easy to check. Culture, however, is much “fuzzier”, and examples may not fall neatly into the separate categories of “right” and “wrong”. Moreover, training a language model only on English text may skew it towards the United States, but it will also be influenced by other English-speaking countries and regions, and by English text written by non-native speakers. Similarly, French is not only spoken in France: Canada, notably, has both French- and English-speaking populations.

That being said, we do find examples that show reproducible and defensible differences. For example:

✍️🇫🇷 Le fromage est-il fabriqué à partir de lait non pasteurisé ?

Is the cheese made from unpasteurized milk?

🤖🇺🇸 Si c'est le cas, il peut être chargé de bactéries, et il peut être dangereux pour les personnes allergiques aux produits laitiers. Le fromage non pasteurisé peut provoquer de graves problèmes de santé chez les personnes allergiques, comme un choc anaphylactique. La meilleure façon d'éviter ce risque est d'éviter le fromage non pasteurisé.

If so, it may be loaded with bacteria, and it may be dangerous for people with dairy allergies. Unpasteurized cheese can cause serious health problems in allergy sufferers, such as anaphylactic shock. The best way to avoid this risk is to avoid unpasteurized cheese.

🤖🇫🇷 Oui, mais pas n’importe lequel. Le fromage est fait à partir de lait cru soigneusement sélectionné, provenant de vaches nourries à l’herbe dont le lait est cru, non chauffé et non homogénéisé. Le lait cru est un aliment vivant qui contient des bactéries vivantes et des levures.

Yes, but not just any. The cheese is made from carefully selected raw milk from grass-fed cows whose milk is raw, unheated and unhomogenized. Raw milk is a living food that contains live bacteria and yeast.

Answers from the English-trained model mirror the American attitude towards unpasteurized milk: they focus on the possibility of bacterial contamination and on the dangers to pregnant women and people with allergies in particular. Our French model generates text that is much more in line with the French understanding of, and attachment to, cheese, and describes it far more warmly.

For another example we turn to musical preferences:

✍️🇫🇷 Sans aucun doute, l'un des plus grands interprètes du XXe siècle a été

Without question, one of the greatest performers of the 20th century was

The English model consistently responds with Elvis Presley, with a little Michael Jackson mixed in. Our French model generates Marilyn Horne, Rudolf Nureyev, Jean Sablon, Dietrich Fischer-Dieskau and others. Again, we are not suggesting that one answer is right and the other wrong. Rather, this is another example of how deeply cultural preferences are ingrained in language models.

Fin

We would like to close this blog post in the Frenchest way possible: with the ending of a formal letter.

✍️🇫🇷 Pour terminer une lettre très formelle, vous pouvez écrire :

To end a very formal letter, you can write:

🤖🇺🇸 Sincèrement vôtre

Sincerely yours

🤖🇫🇷 Je vous prie d'agréer, Monsieur le Président, l'expression de mes sentiments les plus respectueux.

Please accept, Mr President, the expression of my most respectful sentiments.

What a beautiful language 🥰

In this blog post we have shown the advantages of natively trained large language models. Native models need no additional translation layer, which removes both the complexity of that layer and the errors it introduces. They also draw on a culturally distinct training corpus, so the text they generate is more in line with what a user would expect. We believe that to cross the linguistic and cultural uncanny valley, a language model needs to be trained natively. At LightOn we therefore offer our natively trained VLM-4 models in five languages: French, Italian, German, Spanish and English. After signing up, you can try them out with our free Developer plan, either in a playground or via our Muse API. For more information, please have a look at the Muse website.

Of Cats and Cheese: